- What's brewing in AI

- Posts

- 🧙🏼 How I use AI for validating decisions

🧙🏼 How I use AI for validating decisions

I used 4 reasoning models to stress-test an idea

Using multiple AIs to validate a decision

I used 4 reasoning models to stress-test an idea. Here’s how.

Was this email forwarded to you? Sign up here.

This week I used multiple AI models to validate a strategic decision.

Here’s the step-by-step method I used, which could be helpful when you want a second opinion from AI on something important.

Disclaimer: Before you apply this on life decisions: I’m using AI in ways that might lead to unintended results. Think of me as a crash-test dummy—you be the adult. (don’t forget, I’m the type of idiot who feeds my bookkeeping straight into ChatGPT.)

The decision: updating my newsletter’s headline

Here’s how I used this framework to benchmark my newsletter’s headline (pitch) against other newsletters and decide whether to ship it.

This is the final headline version I landed on. What I like about it is that it speaks to people who are interested in actually building with AI, not just reading about it. I’m confident it will attract the right people who will benefit most from what I share.

IN PARTNERSHIP WITH SECTION

ProfAI is the fastest, easiest way to learn AI. In just 60 minutes, you’ll complete fun, interactive lessons and earn your AI proficiency certificate. ProfAI delivers role-specific AI training you'll actually be able to use—so you can start applying AI at work today.

Why the change?

Since signing up for Claude alongside ChatGPT, I’ve found myself ping-ponging between them when validating certain decisions. The models don’t always seem to agree, at least not fully.

To feel more confident in my choices, I’ve come up with a more structured approach that helps me validate strategic decisions with the help of multiple AI models. (also, yes, I do like to overthink this type of stuff.)

Let’s first get on the same page about what type of decisions this approach can be useful for; practical decisions with alternatives you can line up and judge. When you’re making an important choice, with a long-term impact, under uncertainty and complexity. I really don’t want you to use an approach like this for emotional decisions, and neither does Sam Altman. Use AI as a second opinion, not life advice. Never outsource your human judgement.

To show you the method, I’ll be using the example of me validating my newsletter’s positioning here. You could use the same method on everything from evaluating which product feature to kill, deciding which markets to target, which vendors to use or which vacuum cleaner fits your needs.

The focus of this newsletter has over time shifted from purely sharing AI news to focus on how I’m personally using and building with AI. Like every brand and product, I have my own “headline”/elevator pitch on my sign-up page that tells new visitors very quickly why they should subscribe.

My previous headline (what I’m replacing)

This pitch has served me well so far, it’s clean and simple. But it feels a bit generic and vague. Also, it emphasises “AI news” right at the start. I’ll still cover big AI releases in this newsletter, but my focus going forward will be on how I’m personally using AI (content you won’t find anywhere else).

The new headline better reflects the direction in which I want to take this newsletter.

To craft the new pitch, I spent time reflecting on how to succinctly say what benefit my newsletter brings to the reader. I also looked towards other larger and successful newsletters on how they pitch their publication, and borrowed some inspiration. Each pitch typically contains a promise (benefit you’ll get as a reader, e.g. how to brew coffee like a pro) and a mechanism (how they’ll deliver that benefit, e.g. a 3-minute daily newsletter) and an element of social proof (e.g. 3,751 baristas read this).

The method: validating a choice with AI – step by step

After refining my headline (with the help of Claude), I came up with the variant I showed you initially; however, I still wasn’t sure how good it is as a pitch.

How well does it tell what the newsletter is actually about? Does it communicate how the benefit is delivered? Does it give a clear cue as to who I, the author, is? Is the social proof at the end aligned with the promise of the pitch?

Why I’m suggesting a multi-model approach

I wanted to try benchmarking my pitch against other newsletter and decide whether to use it with the help of AI.

I tried asking Claude how good the pitch was compared to a set of 7 other newsletters (mostly in the AI and tech space): the answer was that it was above average. I tried asking ChatGPT the same question (in a private chat so it doesn’t use memory) and it seemed to agree.

You probably know by now that your prompt influences the response a lot, and that AI tends to over-agree (Sycophancy). That made me question whether there was actually a consensus between models—and to what extent.

So I took a systematic approach where I gave the latest flagship models from Google (Gemini 2.5 Pro), Anthropic (Claude 4 Sonnet and Claude 4.1 Opus) and OpenAI (GPT-5 Thinking) the same task “rate and rank my newsletter pitch vs these other newsletters’”.

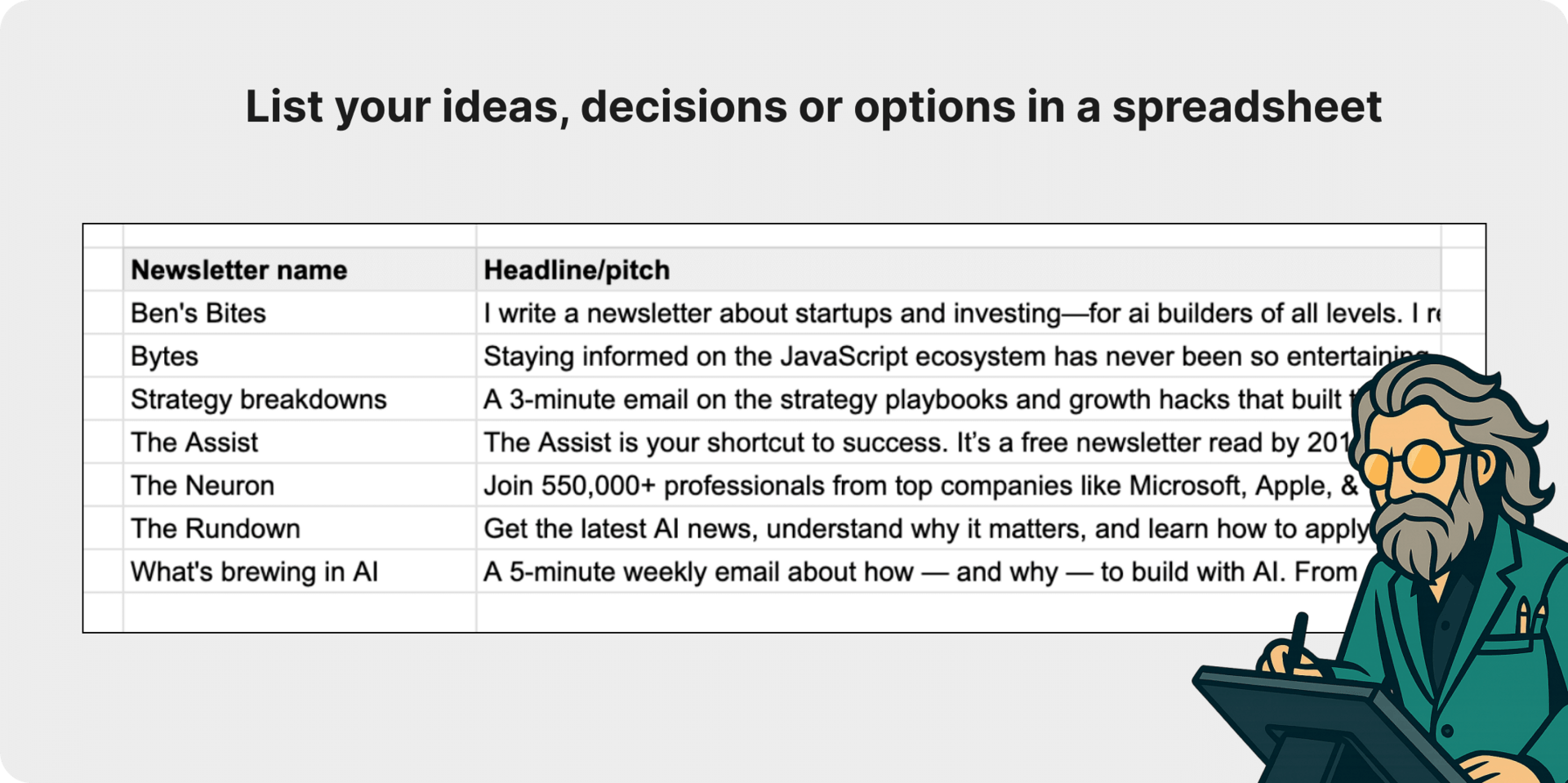

Step 1: list options + context in a spreadsheet

The first thing I did was gathering pitches from other newsletters that I wanted to compare mine against, and put them in a spreadsheet. These were my decision options.

Step 2: Prompt each model identically

Second, I gave each of my chosen AI models the same prompt, including the table from my spreadsheet.

Step 3: Record each model’s rankings

I noted down answers from each model, and calculated an average ranking for each pitch on the list. As you can see, the 4 different models all ranked my pitch differently. However, it was still ranked in the top 4 each time. The question then, was: does that mean that they agree on some level?

Step 4: Check agreement across models

I pasted a screenshot of the table to GPT-5 Thinking and asked it to analyse the table of rankings. It thought for 2.5 minutes and carried out some common statistical tests for agreement and consistency. The verdict: “there’s a clear consensus on the top and bottom”.

I ran the whole testing sequence (steps 1-4) on the final pitch twice to be sure I was getting consistent results. There was some variation in the rankings versus the first time, but overall, the statistical analysis* showed the models generally agreed on which pitches were best and worst, even if the middle rankings varied. And in both runs, my pitch came out on top.

With that answer, I felt more confident on moving forward with my newsletter’s new headline**.

❦

I think this method could be applied across many domains where you need to evaluate a decision against alternatives, and for many purposes.

If you’re making a decision collectively, for example at work, I think using this method (or something similar) could add some meaningful structure and transparency beyond “I asked ChatGPT and it agreed”.

Since many of us are already sense checking decisions with ChatGPT all the time anyway, I think a cross-checking approach like this can reduce some degree of bias and randomness, or at least give you a bit more confidence, over just using just a single model.

Important notes

A caveat that needs to be said: just because AI agrees that one choice is better than another one does NOT make it so. In the end, strategic questions like these don’t have a wrong or right answer. Use for validation and sense checking; I don’t think anyone should purely rely on AI. Your domain knowledge, peers, objective data and human judgement should probably be consulted first — then cross-check with AI.

If you’re going to try this at home, make sure you use only leading reasoning models (the ones I used, and you could add in DeepSeek if you want). Also, you want the AI to have as little information about you or the problem at hand outside of the prompt you give it to avoid introducing unnecessary bias; ChatGPT and Gemini have temporary chat options which don’t use memory. While Claude doesn’t have memory, it recently got the ability to search your previous chats, so make sure this option is toggled off.

*For the statistically curious: in my example here, agreement was moderate by Kendall’s W, and Spearman correlations showed Claude (both models) and GPT-5 were closely aligned, while Gemini was an outlier. While there was high variability in the middle ranking, the extremes (top and bottom) showed stable consensus.

**inb4 questions of “why didn’t you just A/B test a bunch of pitch variants”. Yes, that would’ve been useful too, but I’d need volume for reliable results (which would take time) and Beehiiv (my ESP) doesn’t make signup-page A/B tests easy. Also, my goal here was fit and clarity, not pure conversion rate.

How did you like the new format of this newsletter?(super appreciate if you leave feedback after voting) |

THAT’S ALL FOR THIS WEEK

Was this email forwarded to you? Sign up here. Want to get in front of 20,000 AI enthusiasts? Work with me. This newsletter is written & shipped by Dario Chincha. |

Affiliate disclosure: To cover the cost of my email software and the time I spend writing this newsletter, I sometimes link to products and other newsletters. Please assume these are affiliate links. If you choose to subscribe to a newsletter or buy a product through any of my links then THANK YOU – it will make it possible for me to continue to do this.