🧙🏼 Rescuing my critical thinking skills

Using AI correctly is not enough

Using AI gives an illusion of learning. Here’s my plan to stay sharp.

Was this email forwarded to you? Sign up here.

AI has made me more productive than ever.

But if you’re feeling dumber from using AI sometimes, you’re not alone.

Some people want you to think it’s a skill issue: that if you just use it the right way, there are no negative effects.

They seem to ignore that the medium itself has effects beyond how you use it.

My last email (me, realising I’m overusing AI) struck a chord with many of you. I received an unexpected number of DMs from people saying they experience the same phenomenon, “I needed to hear this”, etc.

In this email:

What we’re missing out on when using AI

Why keeping our critical thinking sharp is more important than ever

Why using AI correctly is not enough

What I’m doing to balance out the downsides of using AI

My brain’s natural superpowers

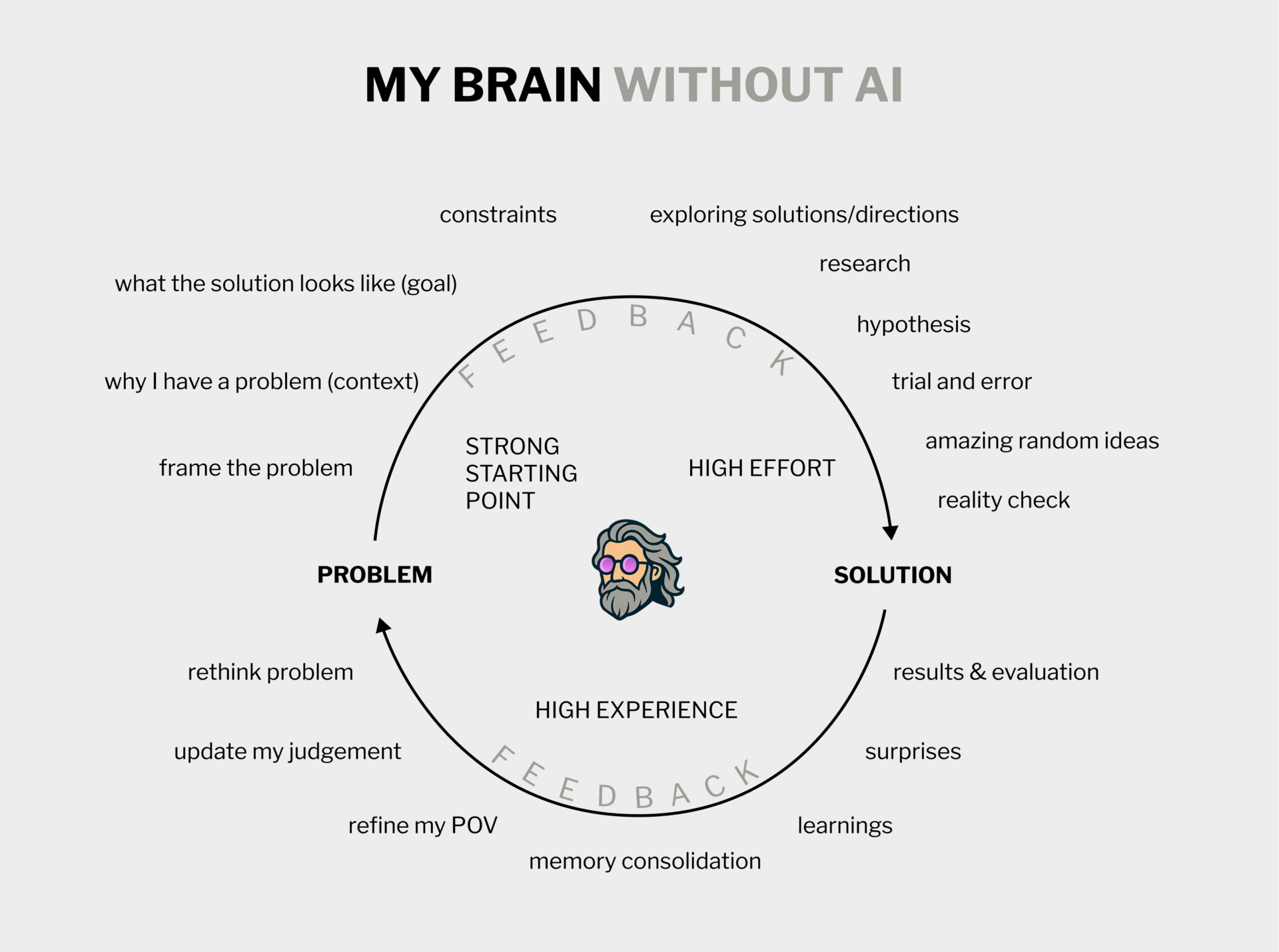

A simplified mental map of how my brain tackles a problem

As a first step in identifying what we’re missing out on when using AI, I find it helpful to remind myself of how my brain works without AI:

It has a clear sense of why I have a problem, why I want to solve it and what the end goal looks like

It explores solutions and specific directions, and forms a thesis about which solution is right

There’s a feedback loop in which trial and error from attempting to solve the problem refines my understanding of the world, how I observe, and my point of view. Solutions inform judgement.

It enjoys the process of learning new things, exploring ideas, directions and solutions. And I’ve noticed that in that process, I often also get some great random ideas apart from the answer I set out to get.

AI is cool, but impacts our critical thinking

Emerging research suggests that relying on AI builds up a cognitive debt.

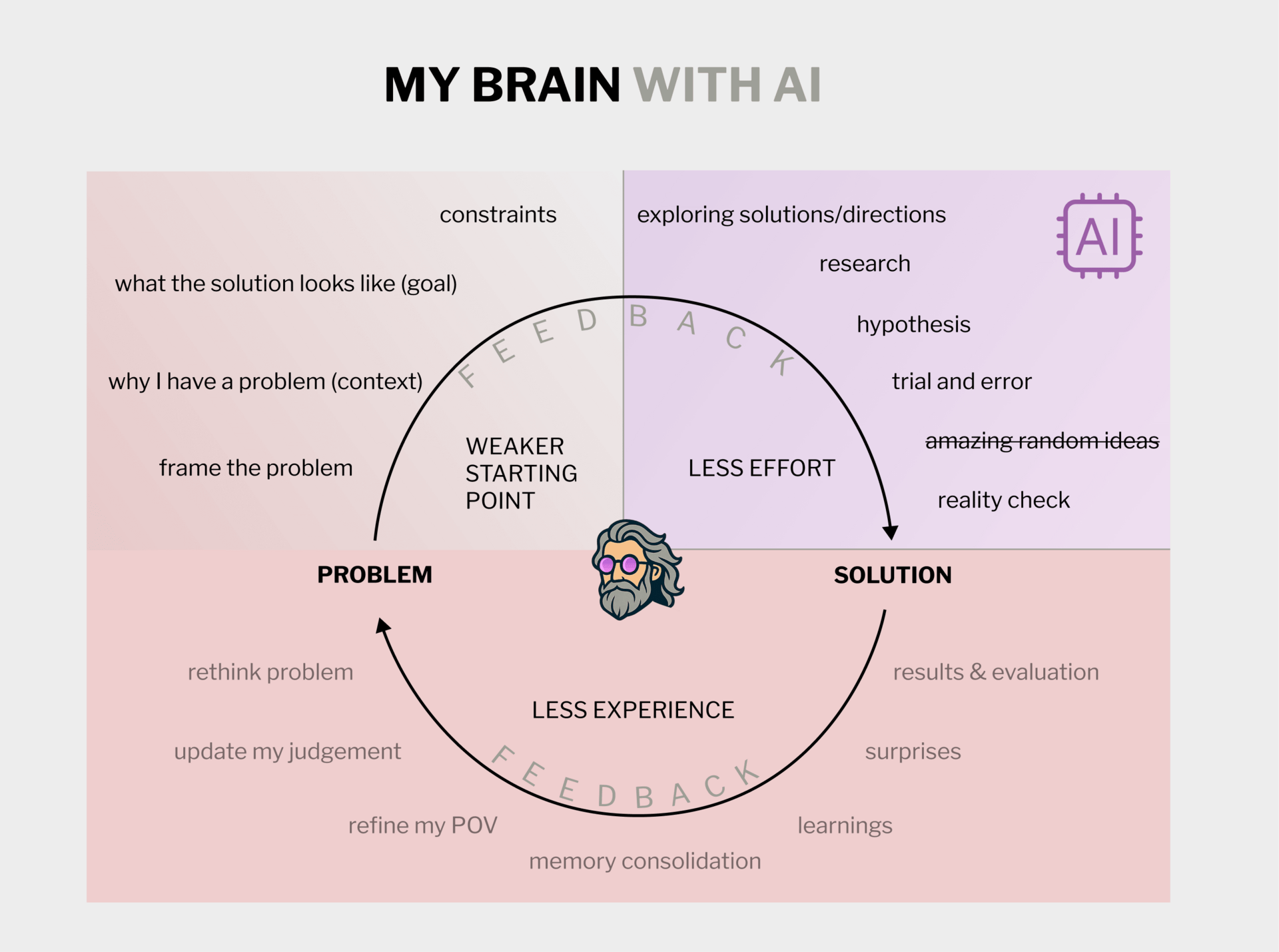

My intuition is that using AI all the time (what some CEOs call reflexive use of AI) subtly encourages us to cut our cognitive process short at mere problem awareness. We skip much of the effort we would normally put in to get the result, which means less experience feeds back into our memory networks. Paradoxically, over time this leaves us with a weaker starting point for using AI.

If that explanation went over your head, worry not, because this meme illustrates it perfectly (I personally choked on my coffee at “what are my needs?”).

I looked into the research, and here’s why we still need a sharp head in an AI-first world:

Effortful learning builds metacognition; when it’s underdeveloped, we’re more prone to automation bias, and AI more easily misleads us and inflates our confidence.

LLMs aren’t really capable of advanced reasoning, though they give off an illusion of thinking.

Because they’re trained to produce likely-sounding text, LLMs can be very persuasive, even when they’re wrong.

Autonomous systems will likely need human supervisors for years to come.

And without solid judgement and decision-making skills (our starting point for using AI), LLMs won’t create value and they won’t drive innovation.

By defaulting to AI for every problem, we avoid the kind of effortful learning that builds experience, and lose joy and serendipity in the process. We also become less able to make AI helpful and more prone to errors and deception.

Armed with that understanding, I’m now actually motivated to not take the shortcut of AI all the time.

How you use AI isn’t everything

Influential people, like Nvidia’s CEO Jensen Huang, want you to believe that it’s only about how you use it.

He makes the argument that if you just ask AI to teach you things you don’t know or solve problems you couldn’t otherwise solve, you’re actually enhancing your cognitive skills.

This makes it sound like we’re dealing with a skill issue.

It’s a seductive argument, but it ignores that learning itself requires effort.

By using AI, you're still bypassing a large share of the mental processing that would normally require real effort on your end.

Understanding something new quickly can be extremely useful, but it also gives you an illusion of learning.

And as you know, these models aren’t actually thinking for you, they’re juggling probabilities.

While you think you’re learning, the opposite thing might be happening, given that our critical thinking skills atrophy when we rely on AI.

The irony is complete: we are given an illusion of learning by models that give off an illusion of thinking, and the critical thinking needed to steer them is vanishing in the process.

The three steps I’m taking

I personally notice a low-key reluctance to think when I know AI can just give me the answer (ref. my keyboard story).

I want to heal that.

Recognising that AI, as a medium, subtly conditions me to outsource my thinking feels empowering and seems like the obvious first step to improving my relationship with it.

Here’s my recovery plan:

Keeping score. From now on, I’ll use AI with the awareness that I’m taking a shortcut. To illustrate this, I’ll use the metaphor of going somewhere and having the choice between walking or taking the car. If I take the car all the time, I need to make sure to balance that choice with enough exercise elsewhere. Likewise, I’ll use AI knowing that I’m building up a cognitive debt. No harm in that—as long as I pay it off with enough real, non-AI assisted learning elsewhere.

Showing up with a hypothesis. When I use AI on a subject I actually seek to understand, I’ll do my homework first. I’ll reason through it as far as I can, exploring solutions and creating a hypothesis in my head—before starting to prompt. It’s easy to stop my thought process when I feel like I’ll have a “good enough” prompt to get the job done; the idea is to discipline myself to go one step further—before asking AI. This way, I’m deliberately leaning into the territory which AI would otherwise handle for me (the purple quadrant of my illustration above). The idea is that I’ll still get some of the mental exercise while using AI, comparable to riding an e-bike versus taking the car.

Going offline. I’ll do a no-tech day every so often, e.g. every two weeks. No computers. And especially no LLMs. This is the measure I’m least excited about, which probably means it’s also the most effective one.

These measures recognise that AI as a medium conditions certain behaviours: outsourcing effort and the cognitive processes that build our understanding.

Ironically, creating something valuable with AI requires us to maintain those same behaviours that we’re often outsourcing.

You can't fix that with better prompts.

These measures make sense to me personally, at this point in time. Everyone should approach this individually.

The bigger picture is this: stay willing to contemplate how AI affects you.

THAT’S ALL FOR THIS WEEK

I’m curious—

Have you noticed any downsides from using AI? If yes, what’s one thing you’re doing about it?

If you haven’t noticed anything, I want to hear that too.

Your POV is valuable to me! Hit reply or leave a comment in the poll below.

Want to get in front of 21,000+ AI builders and enthusiasts? Work with me. This newsletter is written & shipped by Dario Chincha.

What's your verdict on today's email?

Affiliate disclosure: To cover the cost of my email software and the time I spend writing this newsletter, I sometimes link to products and other newsletters. Please assume these are affiliate links. If you choose to subscribe to a newsletter or buy a product through any of my links then THANK YOU – it will make it possible for me to continue to do this.