🧙🏼 What keeps me curious

Give yourself permission to tinker

Was this email forwarded to you? Sign up here.

Welcome to the 200th edition of this newsletter. I've published a newsletter on average every 4.6 days for the last 924 days.

Today, I'll tell you why I keep writing about AI. The thing that keeps it interesting for me.

It all started with an appreciation and enthusiasm for how much more productive AI has allowed me to be. I try to share everything I discover to be useful about AI in this newsletter, even when I have doubts about whether it's the "best way" to do it.

That's not always easy.

Incredibly smart people out there are also sharing what they know. Whatever the topic, there's someone with a PhD in it who's sharing stuff that, methodologically and conceptually, is light-years ahead of what I had in mind.

Oftentimes, I read their tweets and articles and newsletters and think to myself I should simply stop sharing.

Let me give you an example.

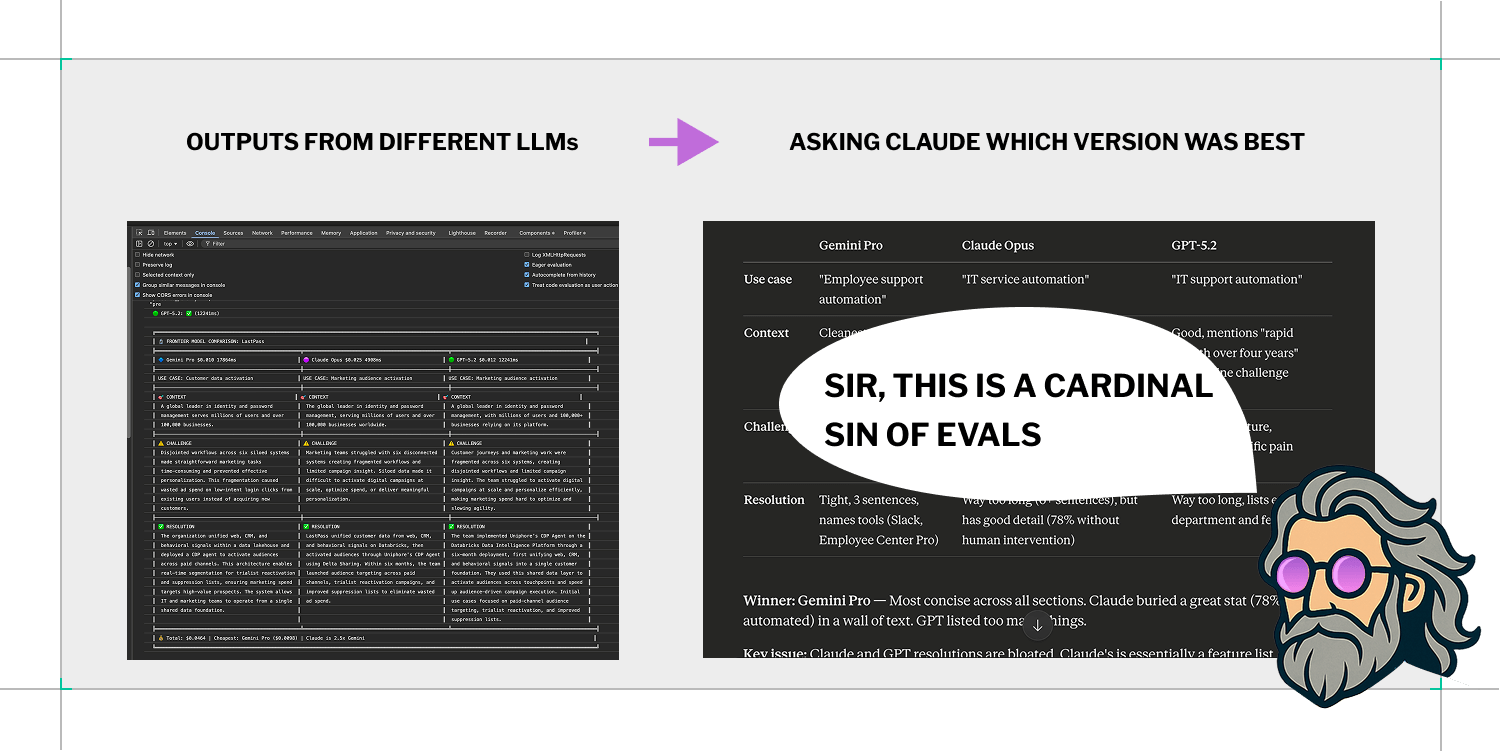

This week I was going to write about a handy method I used for deciding which LLM is best for a given task.

I was building a pipeline that would generate thousands of summaries without me in the loop. I needed to pick the right model. So I got API keys from several providers, had Claude Code test the summarization across several models, compare outputs side by side, and then tell me which of them performed best.

It surprised me how simple it was to do this with Claude Code. I wanted to share it.
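For the curious, here's roughly what the shape of such a comparison could look like in Python. Treat it as a minimal sketch, not what Claude Code actually wrote for me: the two "models" below are hypothetical stand-in functions so the example runs without any API keys, and `compare_models` and `format_side_by_side` are names I made up for illustration. In a real setup, each entry would wrap a call to a provider's API.

```python
from typing import Callable, Dict, List

# Hypothetical stand-ins for real models. Each takes a text and returns a
# "summary". In practice, each would wrap a call to a provider's API.
def first_sentence(text: str) -> str:
    return text.split(". ")[0].rstrip(".") + "."

def first_ten_words(text: str) -> str:
    return " ".join(text.split()[:10])

MODELS: Dict[str, Callable[[str], str]] = {
    "first_sentence": first_sentence,
    "first_ten_words": first_ten_words,
}

def compare_models(
    models: Dict[str, Callable[[str], str]], documents: List[str]
) -> List[Dict[str, str]]:
    """Run every model on every document; return one row per document."""
    rows = []
    for doc in documents:
        row = {"document": doc}
        for name, summarize in models.items():
            row[name] = summarize(doc)
        rows.append(row)
    return rows

def format_side_by_side(rows: List[Dict[str, str]]) -> str:
    """Render the rows as plain text so a human can read the outputs together."""
    blocks = []
    for i, row in enumerate(rows, start=1):
        lines = [f"Document {i}: {row['document']}"]
        for name, value in row.items():
            if name != "document":
                lines.append(f"  [{name}] {value}")
        blocks.append("\n".join(lines))
    return "\n\n".join(blocks)

if __name__ == "__main__":
    docs = ["AI can build apps fast. But things fall apart."]
    print(format_side_by_side(compare_models(MODELS, docs)))
```

The point of the side-by-side output is that you can read it yourself, whether or not you also ask an AI which model did best.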

I spent a couple of hours drafting a newsletter post about how to set this up in detail, creating visuals for it, the whole thing.

Then I realised that what I was doing is what people call "evals", short for evaluations. I Googled it. First link: a tutorial by an ML engineer with 20+ years of experience who's trained OpenAI staff on this exact topic.

One of his points was that you need to be deeply involved in the evaluation process yourself. You can't just outsource the judgment to another AI.

So here I was, about to send out a newsletter about my method, which apparently skips a step that experts consider essential.

I scrapped the draft.

Then I paused.

IN PARTNERSHIP WITH BRAINGRID

Claude Code, Cursor, Lovable, Replit. AI can build apps fast. But a few hours in, once you start adding real features, things fall apart.

One small change breaks three other things. Context gets lost. Features stop working. You're not sure why.

The problem isn't the AI. It's that you skipped the planning.

BrainGrid is the Product Management Agent for AI coding. It turns vague ideas into clear specs and maps your UX flows. It asks the clarifying questions you forgot to ask and breaks work into structured tasks that AI tools can actually build correctly.

Instead of vibe coding your way into a fragile prototype, BrainGrid helps you plan first, so you ship features that work, scale, and stay fixed.

If you're serious about building real products, not just prototypes, this is what's missing.

❦

I thought about it, and realised that the workflow where I did this "wrong" thing actually works damn well. Those summaries serve a specific purpose, and having read through hundreds of them afterwards, I can say they serve it excellently.

Maybe it works because I had a clear picture of what I wanted and read through the analysis carefully before accepting it.

Maybe, for my specific situation, letting AI help with the evaluation just wasn't such a silly method after all. Maybe the silliest thing was second-guessing myself after I'd already gotten a great result.

I'm building tools where "good enough" is the right standard, the one that keeps me moving and learning. That tutorial I found is great if you need that level of process. I needed results.

So here’s a lesson about AI I keep learning over and over again:

Relying on experts to tell you how to use AI can become a liability. Not because they're wrong. They're usually not.

But because AI is non-deterministic and filled with abstractions.

To me, it's what makes AI interesting.

If this space were purely rule-based, I'd find it boring. What keeps me curious is that AI adapts to each person, context and problem. There's no single right way. That means everyone who engages with it seriously has the opportunity to discover something new.

The curiosity to explore AI freely is what gradually builds your intuition: the skill that lets you make sense of real-world situations where you don’t have a map.

It's so common to see people shouting "do it my way" and packaging AI into fixed solutions. Buy this prompt pack. Follow this framework. You're doing it wrong.

Dogmatic approaches are getting all the spotlight and I think that's a shame.

Two hundred editions in, that's what keeps me writing.

Not to bring you answers, but to encourage you to tinker with this thing, and see where it takes you.

Dario

Want to get in front of 21,000+ AI builders and enthusiasts? Work with me.

This newsletter is written & shipped by Dario Chincha.

Disclosure: To cover the cost of my email software and the time I spend writing this newsletter, I sometimes work with sponsors and may earn a commission if you buy something through a link in here. If you choose to click, subscribe, or buy through any of them, THANK YOU – it will make it possible for me to continue to do this.
